Contents

1. Introduction

2. Related Work

3. Methodology

4. Results and Future Scope

5. References

Introduction

Most of our interaction today is digital, so the tools we use every day can cause significant harm to society when they are used improperly, regardless of intent. Digital misinformation and disinformation have become so rampant on social media and in online news that the World Economic Forum (WEF) lists them among the main threats to human society [1].

Misinformation is false or inaccurate information; when it is spread with the intent to deceive, it is usually called disinformation. With the COVID-19 pandemic ongoing, it matters more than ever that we not only curb the spread of misinformation and disinformation but also design better systems through which concerned and trustworthy bodies can convey clear, concise facts to the public. This is a monumental task, given that false news tends to spread faster than true news [3].

To tackle this, we first analysed the possible reasons and factors behind the spread of false news. We then used these factors to construct a survey and collect data from a selected user demographic through user research. The data from this research will be used to design guidelines and campaigns to combat the spread of misinformation and disinformation.

For this research we chose to focus on people in the 18-23 age group, namely students pursuing their undergraduate education. We attempted to reach a user base that varied along axes such as gender, age, language, location and economic background. This project was done under the advice of Professor Dipanjan Chakraborty.

Related Work

There are many possible reasons why misinformation spreads and why people accept some claims over others, regardless of whether they are true. Knowing these factors is the first step to combating them and designing better information systems. We reviewed related work on the factors that contribute to the spread of misinformation and list them, with their sources, below.

Confirmation Bias : Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one’s prior beliefs or values [4]. If a person is shown two opposing pieces of information, they will more often than not take the path of least resistance [2] and accept the one that fits what they already believe, unless there is a strong reason to do otherwise. With this in mind, the more transparently and unambiguously information outlets cite their sources, the more likely a user is to consider the news in full.

Echo chambers : Echo chambers are environments in which somebody encounters only opinions and beliefs similar to their own, and does not have to consider alternatives [5]. Echo chambers build on the concept of confirmation bias. Simply put, the more someone is exposed to similar opinions, the less they will accept and acknowledge anything to the contrary. The tricky part is that it is not easy to get out of an echo chamber, as people usually do not realise when they are in one. A good indicator is whether most of the sources you get your information from either present it incompletely or are frequently biased in a consistent way. The more someone relies on sources whose biases echo their own, the more likely they are to believe something false from those sources.

Social Influences and Value Sensitive information : A big factor in how people take in news is their emotion. News that plays to certain values may appeal to some people more than others, or turn them away more than others, especially if it pertains to values they hold close. For example, someone who holds money and material things very dear may react readily to fake news about a rumoured scam, regardless of whether it is true. It has been found that people in emotional states are more likely to spread the news that put them in such a state [6]. Studies have also shown that people experiencing heightened emotions are more susceptible to believing fake news [7]. This relates to a field of design known as Value Sensitive Design (VSD), which takes the different values users may hold into account when designing for them. If more news and information sources took these values into account and presented information in more neutral ways, the chance of news being spread purely out of emotion would decrease.

Colour and Font : While not an active contributor to how misinformation spreads, a big part of the study of misinformation and disinformation is how it attracts people in the first place. People’s visual attention is much more likely to be influenced by colours other than black and white, and they are more likely to be drawn towards bright, bolder colours than dull ones [8], [9]. We also wanted to gauge whether the loudness or brightness of a colour influences the trust someone associates with a piece of information, compared with a black-and-white or dully coloured one.

Clickbait factor and Wording : The term clickbait refers to a form of web content that employs writing formulas and linguistic techniques in headlines to trick readers into clicking links. As with the previous variable, it may not be a direct contributor, but how a piece of information is presented or how its title is worded may indirectly influence whether the user reads the piece at all [10] and whether they share it.

Others : These factors came mainly from our own discussions and are novel or minor additions. The presence of a well-known figure as a source could affect someone’s acceptance of news. The language in which the news is presented might be a factor as well, depending on whether the user is comfortable with that language.

Methodology

We first analysed the problem statement and studied the relevant literature to understand the reasons and factors contributing to the spread of misinformation. We then used these factors and variables as a map for writing the questions of a questionnaire-based user survey to gather data.

The questionnaire was divided into two broad sections. The first section collected data about the user: where they get their information from, how much they share information with others, and how connected they are to social media in general. The questions were also designed to show how varied the user’s sources were, as an indication of how susceptible they might be to echo chambers. The questions in the first section are a mixture of objective questions and subjective self-assessment questions.

The second section gauges how susceptible the user is to sharing things, using a set of headlines that vary across the variables we defined after the literature review. Its purpose is to determine whether the results fall in line with our assumptions, or otherwise to derive a set of guidelines from the new response data. Each headline in the section maps to one of the variables that was determined to contribute to the spread of false news. For each variable there were usually two sets of opposing headlines along that variable; in some cases one was true and one was false. For example, for the clickbait factor, we constructed true and fake headlines in both clickbait and non-clickbait styles. The table below shows which variables we tested and how the headlines varied across them. The users were asked how much trust they attributed to each headline and what action they would take (such as sharing it with someone or ignoring it). The results from the survey will be used to construct guidelines for better information system design.

| Variable | Variation | Both true and fake headlines given? |
|---|---|---|
| Font and colour | Professional font in black and white as opposed to a playful font in loud colours | No |
| Clickbait factor | One headline written in a clickbait manner and another with the same content written in a neutral manner | Yes |
| Nuance and divisiveness | A set of three headlines written with different stances on a recent government initiative. Two were written in a conclusive way (saying that the initiative was good or bad) and one was written in a nuanced and neutral manner. | No |
| Believability | Two sets of fake headlines. One tries to use science as a basis, the other is unfounded and offers no sense of proof. | No |
| Wording and vocabulary | One headline written using technical terms and one written in an easy to understand manner. | No |
| Characters | A set of quotes, each from a different source. One is from an unspecified source, the other is from a well-known source or person. | No |
| Value sensitive - Faith | Two memes were generated, one that directly appeals to a person's sense of faith and one that does not. | No |
| Value sensitive - Money | One headline directly mentions a monetary value, the other just mentions an insurance plan. | No |
| Value sensitive - Family | Two headlines were written, one that mentions a remedy to protect the reader's family and one that does not mention family. | No |

Before releasing the survey to the selected demographic through snowball sampling, we ran a pilot with a few people to see how long the survey took and to smooth out rough edges such as wording. We also translated the survey from English into Hindi to extend its reach to demographics that may not be fluent in English. The English survey was then released to several student groups.

Results and Future Scope

Currently, the English survey has around 30 responses. Using the data gathered from these forms, we plan to construct a set of comprehensive factual information campaigns with the purpose of promoting reliable information and combating disinformation.

Summary of the user data :

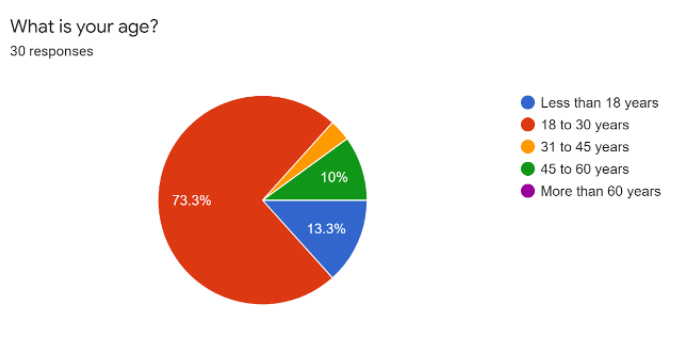

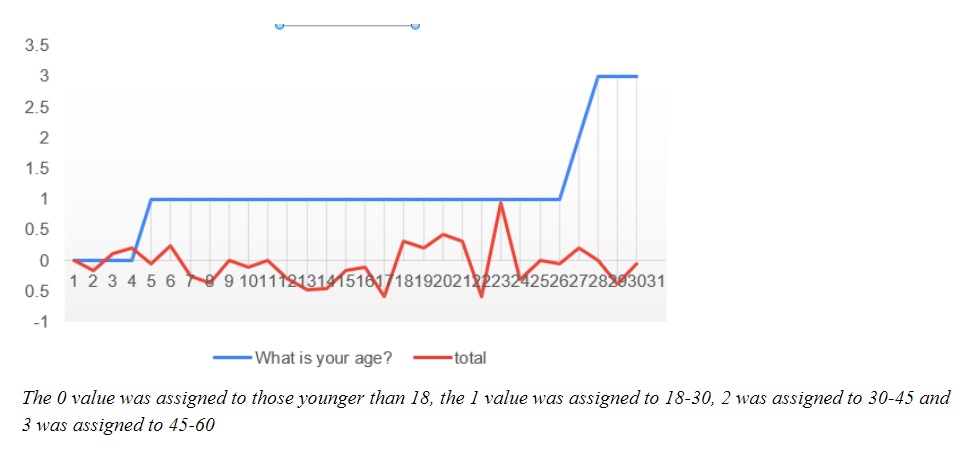

- Out of the 30 responses, 22 were in the 18-30 age group, 4 were under 18, 3 were in the 45-60 age group and 1 was in the 30-45 age group.

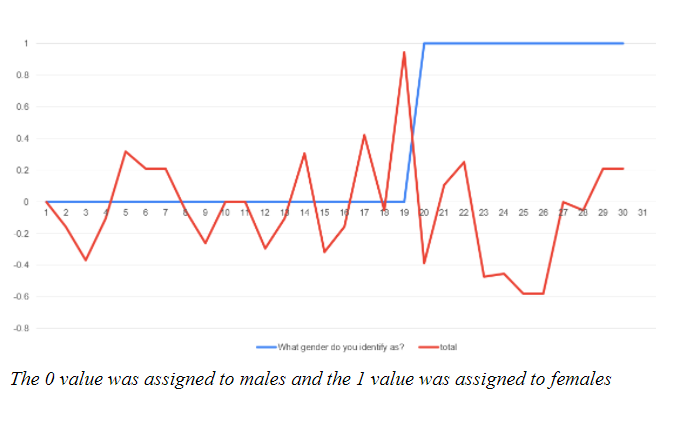

- 19 of the participants were male, and 11 of them were female.

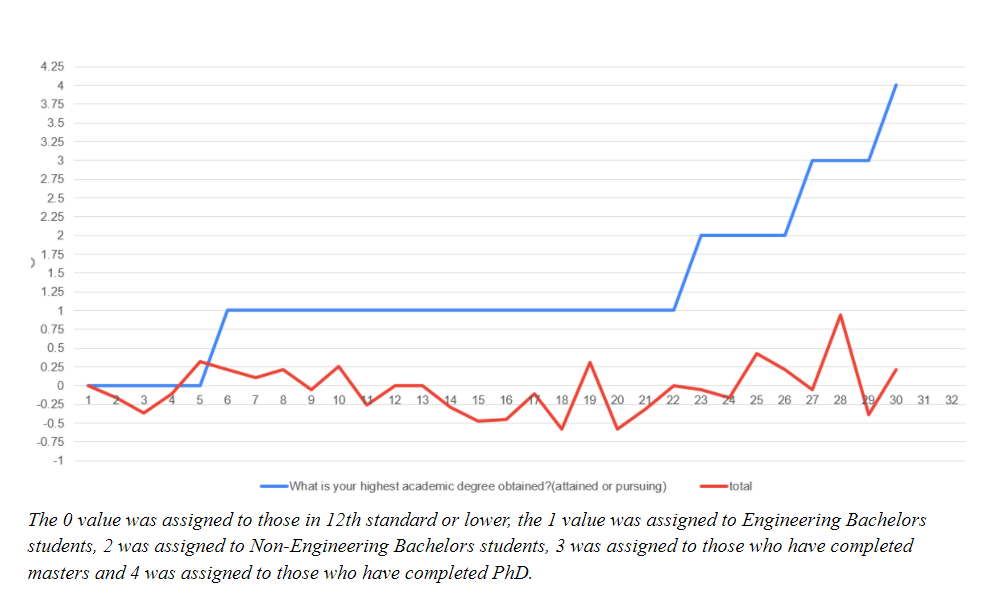

- All of the participants had completed or were pursuing a bachelor’s degree, and 9 had studied further.

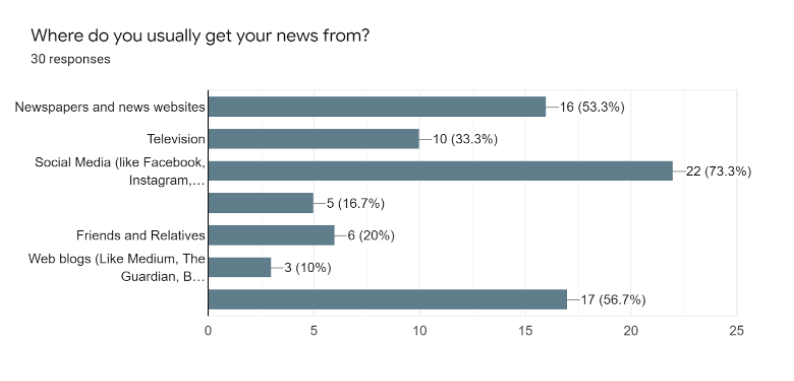

- 25 of the users identified as frequent users of social media and 22 cited it as a source of news for them.

- The most used social media applications by the users were WhatsApp, Instagram, YouTube and Facebook in descending order.

Analysis of the data : For each headline or piece of information in the second section, users chose one of four options for the level of trust they associated with it: high, moderate, low, or depending on the source. We assigned these options numerical values of 1, 0.5, -1 and 0 respectively. Each user’s values were averaged across all items to give what we term the trust value of that user. So far, we have graphed these trust values against the users’ age, gender and education.
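The scoring described above can be sketched in a few lines of Python. This is a minimal illustration rather than the analysis script used for the survey: the option labels, the `trust_value` and `mean_trust_by_group` helpers, and the three sample respondents are all assumptions made for the example, and only the four numerical scores come from the text.

```python
from statistics import mean

# Scores from the text: high = 1, moderate = 0.5, low = -1,
# "depending on the source" = 0. The label strings are assumed.
TRUST_SCORES = {
    "high": 1.0,
    "moderate": 0.5,
    "low": -1.0,
    "depends on source": 0.0,
}


def trust_value(answers):
    """Average the per-headline scores of one respondent."""
    return mean(TRUST_SCORES[answer] for answer in answers)


def mean_trust_by_group(respondents, key):
    """Average the trust values of respondents that share the same `key`."""
    groups = {}
    for person in respondents:
        groups.setdefault(person[key], []).append(trust_value(person["answers"]))
    return {group: mean(values) for group, values in groups.items()}


# Made-up respondents for illustration only; this is not survey data.
respondents = [
    {"gender": "male", "answers": ["high", "moderate", "low", "depends on source"]},
    {"gender": "female", "answers": ["low", "low", "moderate", "high"]},
    {"gender": "male", "answers": ["high", "high", "moderate", "moderate"]},
]

print(mean_trust_by_group(respondents, "gender"))
```

Because "low" is scored as -1 rather than 0, a single low-trust answer cancels out a high-trust one, so a respondent's trust value can be negative.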

From the data gathered from the users, we noticed that, on average, males tended to have a higher trust value than females. We also found that the lowest trust values tended to fall in the 18-30 age group, perhaps because of their heavy participation in social circles, both online and offline.

Conclusion and Future Scope : The biggest task ahead right now is gathering more data, as it is difficult to draw conclusive correlations from small sample sizes. We plan to broaden the reach of the original survey by distributing the Hindi translation, and also to split the variables of the second section into a set of shorter forms that should be easier to distribute and gather responses with. These forms would be handed out by a randomizer on a simple HTML page, still in development, that gives each visitor the link to one of the Google Forms in the set. Once we have enough data, the final step will be to analyse it using advanced statistical methods and use the results to construct guidelines and theories to design around in order to combat the spread of disinformation.
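The selection logic of such a randomizer could look like the sketch below. It is written in Python for illustration only, not as the HTML page itself, and it makes several assumptions: the links are placeholders (the real form URLs are not given here), and the balanced variant, which keeps response counts even across forms, is an optional idea rather than part of the plan described above.

```python
import random

# Placeholder links; the real Google Forms URLs are not given in the text.
FORM_LINKS = [
    "https://example.com/form-a",
    "https://example.com/form-b",
    "https://example.com/form-c",
]


def pick_form(links, rng=random):
    """Return one link chosen uniformly at random."""
    return rng.choice(links)


def pick_balanced(links, counts, rng=random):
    """Pick at random among the links handed out the fewest times so far."""
    fewest = min(counts[link] for link in links)
    candidates = [link for link in links if counts[link] == fewest]
    choice = rng.choice(candidates)
    counts[choice] += 1  # record the assignment so later picks stay balanced
    return choice


# Hand out six links while keeping the three forms evenly assigned.
counts = {link: 0 for link in FORM_LINKS}
for _ in range(6):
    pick_balanced(FORM_LINKS, counts)
print(counts)
```

Uniform selection is the simplest option, but with few respondents it can leave some forms with far fewer answers than others, which is the case the balanced variant is meant to avoid.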

We were only able to study simple factors in our limited time, but we aim to eventually study the correlations among all our tabulated factors.

References

[1] https://reports.weforum.org/global-risks-2018/digital-wildfires/

[2] Del Vicario, Michela, Alessandro Bessi, Fabiana Zollo, Fabio Petroni, Antonio Scala, Guido Caldarelli, H. Eugene Stanley, and Walter Quattrociocchi. “The spreading of misinformation online.” Proceedings of the National Academy of Sciences 113, no. 3 (2016): 554-559.

[3] Vosoughi, Soroush, Deb Roy, and Sinan Aral. “The spread of true and false news online.” Science 359, no. 6380 (2018): 1146-1151.

[4] Nickerson, Raymond S. “Confirmation bias: A ubiquitous phenomenon in many guises.” Review of General Psychology 2, no. 2 (1998): 175-220.

[5] https://www.oxfordlearnersdictionaries.com/us/definition/english/echo-chamber

[6] Martel, Cameron, Gordon Pennycook, and David G. Rand. “Reliance on emotion promotes belief in fake news.” Cognitive Research: Principles and Implications 5, no. 1 (2020): 1-20.

[7] Brady, William J., Julian A. Wills, John T. Jost, Joshua A. Tucker, and Jay J. Van Bavel. “Emotion shapes the diffusion of moralized content in social networks.” Proceedings of the National Academy of Sciences 114, no. 28 (2017): 7313-7318.

[8] Singh, Nayanika, and S. K. Srivastava. “Impact of Colors on the Psychology of Marketing: A Comprehensive over View.” Management and Labour Studies 36, no. 2 (2011): 199-209.

[9] Kim, Dae-Young. “The interactive effects of colors on visual attention and working memory: In case of images of tourist attractions.” (2010).

[10] Scacco, Joshua M., and Ashley Muddiman. “The Curiosity Effect: Information Seeking in the Contemporary News Environment.” New Media & Society 22, no. 3 (2020): 429-448.