Motivation

The main motivation of the research is to explore the viability of crowdsourcing in areas of poverty and low literacy. Crowdsourcing is generally not considered viable in such areas, primarily because of low literacy and limited access to, and familiarity with, digital resources. The question is more relevant than ever given the growing penetration of digital devices and internet connectivity across the population. The study is set within the context of the Digital India mission, which mandates the digitization of handwritten government documents. This initiative is particularly relevant because industry-grade OCR technology cannot be used for local languages, which makes the study's target demographic well placed to contribute.

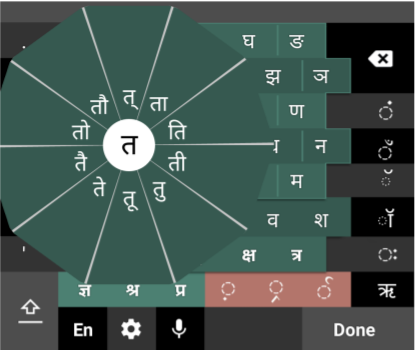

Application Design

User Study

The research was conducted in rural India: an initial two-week user study in Amale, Maharashtra, followed by a second study in Soda, Rajasthan, both low-resource villages. The Amale study primarily tested the usability of the application and identified the changes needed for the second study: widening the participants' age range, using a genuinely handwritten corpus instead of words in a handwriting-like font, and lowering the learning curve of the Swarachakra keyboard, which participants had trouble using for complex words. The dataset consisted of images of handwritten Devanagari words.

The dataset was also preprocessed, most significantly by adding labels to the words. The Soda study involved 32 participants (25 men and 7 women), 21 of whom had prior experience with a smartphone. A one-hour training session let participants practice digitising words and become comfortable with the system. Participants were given 6,000 words and received guidance for the first two days, after which the researchers left. After two weeks the results were collected and the participants were paid (250 INR a day, 0.5 INR per correct word), with a bonus of 1,500 INR for the three most accurate participants. In both studies, the amounts paid were above the minimum wage.

Results

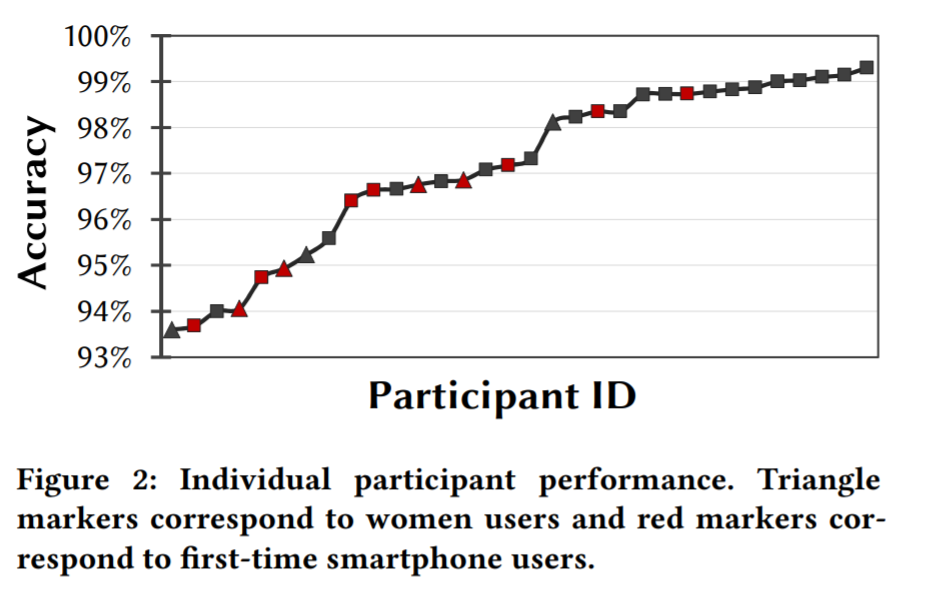

After correcting for some errors in the labels, the participants' average accuracy was 96.7%, with a minimum of 93%. To test the feasibility of this model, the researchers gave the same number of words from the same dataset to a market alternative, a professional transcription service charging 1 INR per word. The firm's accuracy was 98.4%, assuming all of its systematic errors could be fixed.

The researchers also interviewed the participants individually. Participants described the work as a novel experience and a reprieve from their more physically demanding sources of income, and the flexible hours encouraged them to take it on. They said it raised their confidence in typing in Hindi, and they have since started typing in Hindi in their community WhatsApp groups. The study became a communal activity, marked both by camaraderie, as participants helped each other, and by friendly competition. However, one participant was recorded working unhealthy hours.

Quantitatively, these results indicate that crowdsourcing is viable in the target demographic. The paper goes on to detail the limitations of the research: the novelty of the work may have increased participants' output, in-person training may be inefficient at larger scales, and the study assumes that users are literate in their local language. The researchers recommend implementing a reminder system to prevent unhealthy work sessions and suggest that elements of playfulness could bolster productivity in such settings.

Critique and Opinion

For the most part, I found the paper thorough and complete. It addresses the limitations and drawbacks of its research methods, for example the ethical concerns surrounding crowdsourcing, and it clearly explains the reasoning behind the decisions made during the study.

However, I felt a few things could have been done differently. Although the researchers made an effort to widen the age range of participants, 38 still seems too low a ceiling. An older population, with more free time, could be more inclined to take up crowdsourced work. Younger people would also likely find this kind of work substantially easier to learn, so seeing how older participants perform, both quantitatively and qualitatively, would have been useful and relevant to the study. I would have recruited across a wider age range.

While the communal aspect of the study showed how competition and cooperation can boost productivity, it also biases the comparison with the professional transcription firm in the participants' favor. Most crowdsourcing is isolated work. The comparison would have been more complete and less biased if participants had worked in relative isolation, where their work was not affected, positively or negatively, by other users.

I would also have tried a digital training system, since in-person training is not always feasible; this would also reduce bias during the interviews. Adding elements of gamification could have helped people become comfortable with unfamiliar tasks, which might have boosted the group's accuracy and productivity. Finally, the single transcription firm used as a benchmark was itself not immune to errors, so comparing the crowdsourced results with more than one market alternative would have given a more complete picture.

My biggest takeaway from this paper is how communal values can be integrated into the introduction of a novel system to a community. When values close to the community are reflected in the product or study, participation seems higher and the designed system is used more fully. Here, people were more empowered and excited to participate because the work not only made them feel on par with higher standards of digital literacy but also let them achieve this in their local language. A related approach, Value Sensitive Design, touches on a similar process; if future designs keep in mind the context and values of the users they are designed for, we may see similar gains in usage and utility on the user side.